Organizations must balance innovation with stability to succeed over the long term. One effective way to strike this balance is to reduce dependency on a single AI hardware and chipset architecture. By adopting a diversified AI infrastructure, businesses can minimize the risks of system failure, vendor lock-in, and technological obsolescence while reaching a broader customer base.

Relying solely on one AI hardware or chipset architecture presents several challenges:

Strategies for Risk Reduction in AI Hardware and Architectures

To mitigate these risks, organizations should consider a multi-architecture approach by implementing the following strategies:

Expanding Customer Reach Through AI Architectural Diversity

Beyond risk reduction, architectural diversification enhances an organization's ability to serve a broader customer base. A flexible, scalable AI infrastructure allows businesses to:

Conclusion

Adopting a diversified AI hardware and chipset architecture is a critical strategy for businesses seeking to minimize risk and expand their market presence. By leveraging multi-vendor AI environments, modular processing strategies, and open AI standards, organizations can enhance operational resilience while delivering superior AI-powered experiences to a global customer base. In an era where agility and adaptability are paramount, reducing reliance on a single AI architecture is not only a risk-mitigation strategy but also a key driver of sustained growth and competitive advantage.

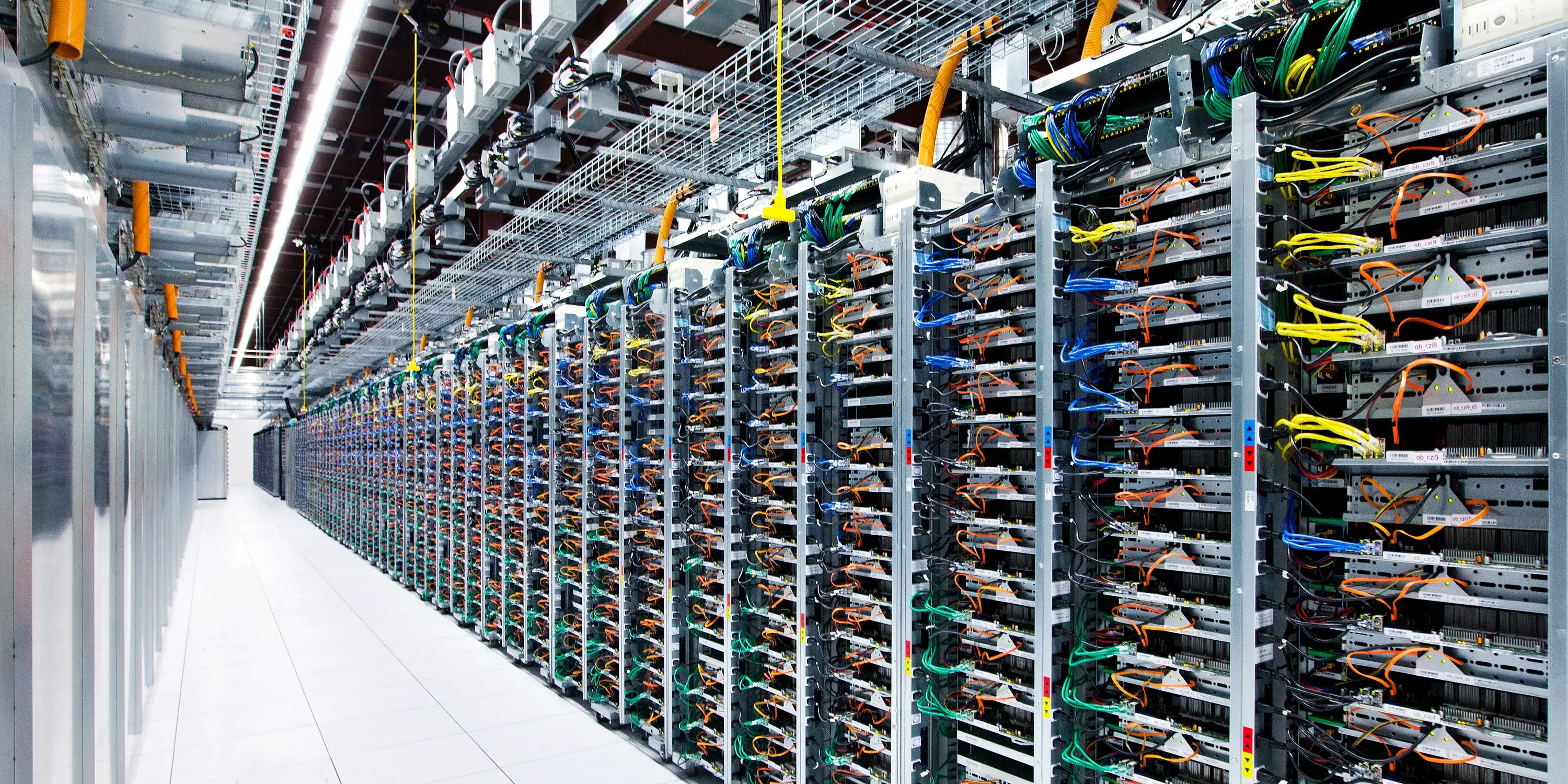

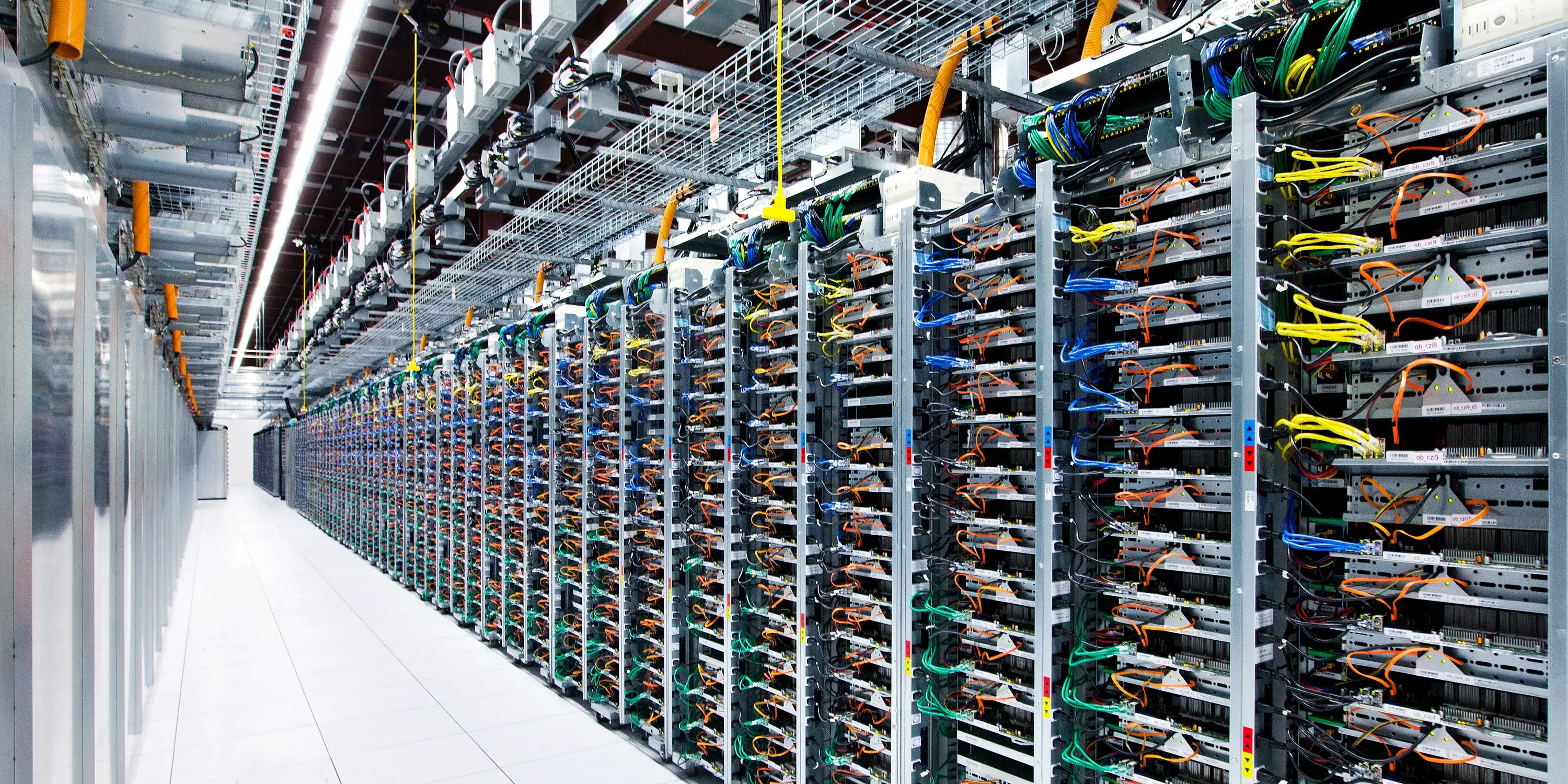
